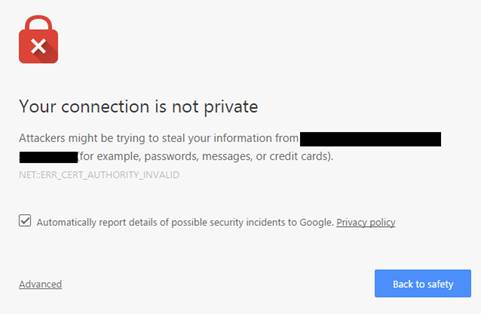

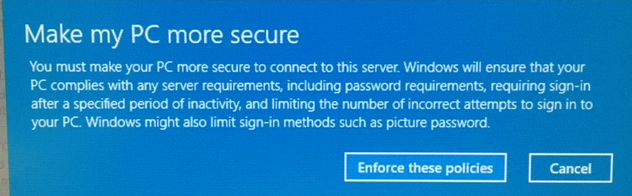

After installing Windows 10, I decided to try out the Mail desktop app. I added my Exchange account under Settings -> Accounts -> Add account. After entering my credentials, I got this message:

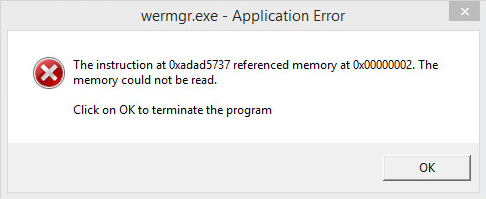

Accepting it caused Windows 10 to inherit its lockout policy from the Exchange ActiveSync policy, which locks the device after one or three minutes of inactivity (depending on how the ActiveSync policies are set up).

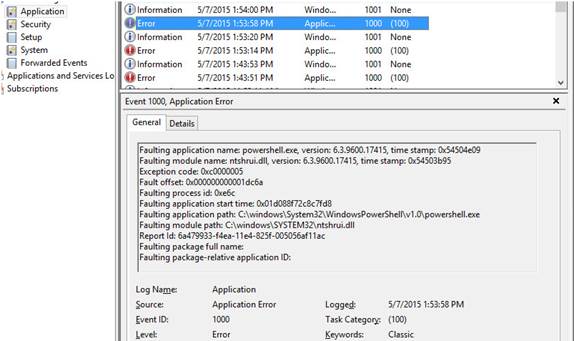

Removing the Exchange account from the Windows 10 Mail app also removed the ActiveSync enforcement of the lockout, so the lockout times went back to being controlled by the power manager application.
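
To make the behaviour above concrete, here is a minimal sketch of how the two timeouts appear to interact. It assumes the device simply applies the shorter of the power-settings timeout and the ActiveSync policy timeout, which matches what I observed but is not taken from any Microsoft documentation. The function name and the 15-minute power timeout are hypothetical, chosen only for illustration.

```python
from typing import Optional


def effective_lock_timeout(power_timeout_min: Optional[int],
                           eas_timeout_min: Optional[int]) -> Optional[int]:
    """Return the idle time in minutes before the device locks.

    None means "no timeout from this source". This is an assumed model:
    the stricter (shorter) timeout wins when both are present.
    """
    # Keep only the sources that actually impose a timeout.
    candidates = [t for t in (power_timeout_min, eas_timeout_min) if t is not None]
    # With no timeout from either source, the device never locks on idle.
    return min(candidates) if candidates else None


# Exchange account added: the ActiveSync policy (1 minute) overrides
# the hypothetical 15-minute power setting.
with_account = effective_lock_timeout(15, 1)

# Exchange account removed: only the power setting applies again.
without_account = effective_lock_timeout(15, None)

print(with_account, without_account)
```

Under that assumption, the one- or three-minute lockout only goes away once the account, and with it the policy, is removed from the device.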