A customer was setting up BitLocker encryption on laptops that users could check out of the office. They wanted to create the BitLocker startup keys on one removable flash drive and then copy the required keys to other flash drives. A user checking out a laptop would also be given a flash drive that could start up any of the BitLocker-protected laptops.

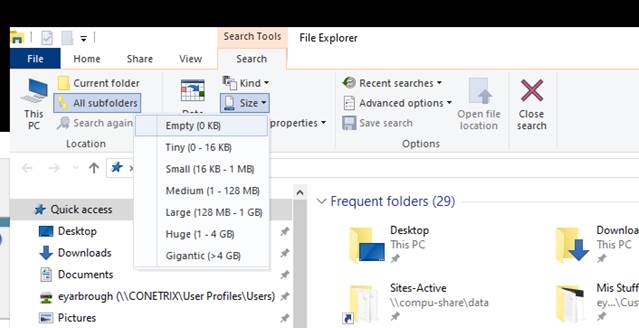

The customer was saving the startup keys correctly through BitLocker, but could not see them on the removable flash drive in Windows Explorer. Although they had "show hidden files" enabled, the startup keys are treated as protected operating system files, so the customer also needed to uncheck the "Hide protected operating system files" view option. With that option unchecked, the startup keys became visible and could be copied to other removable flash drives.
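If changing the Explorer view options is not convenient, the same copy could probably be scripted. The following is only a minimal sketch, not a tested deployment tool. It assumes the startup keys are the `.BEK` files that BitLocker saves in the root of the flash drive. The function names `find_startup_keys` and `copy_startup_keys` and the drive letters `E:\` and `F:\` are hypothetical. Directory listings from Python do not depend on the Explorer view settings, so hidden and system files are returned like any others.

```python
import shutil
from pathlib import Path


def find_startup_keys(drive_root):
    """Return the .BEK files found directly in drive_root, sorted by name.

    Assumption: BitLocker startup keys are stored as .BEK files in the
    root of the flash drive. Explorer's view options do not affect this
    listing.
    """
    root = Path(drive_root)
    return sorted(
        p for p in root.iterdir()
        if p.is_file() and p.suffix.lower() == ".bek"
    )


def copy_startup_keys(source_root, target_root):
    """Copy every startup key from source_root into target_root.

    Returns the list of destination paths. Other files are left alone.
    """
    copied = []
    for key in find_startup_keys(source_root):
        dest = Path(target_root) / key.name
        shutil.copy2(key, dest)  # copies file contents and timestamps
        copied.append(dest)
    return copied


# Hypothetical usage, with E:\ as the master key drive and F:\ as the
# drive handed out with a laptop:
#   copy_startup_keys("E:\\", "F:\\")
```

Whether the copies keep their hidden and system attributes depends on the copy method, so it is worth confirming that a laptop actually starts from the copied drive before handing it out.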